New report assesses progress and risks of artificial intelligence Brown University

And even in cases where children aren’t physically harmed, the use of children’s faces in AI-generated images presents new challenges for protecting children’s online privacy and digital safety. Many new AI-enabled weapons pose major risks to civilians on the ground, but the danger grows when autonomous weapons fall into the wrong hands. Hackers have mastered various types of cyberattacks, so it’s not hard to imagine a malicious actor infiltrating autonomous weapons and instigating absolute armageddon. Along with technologists, journalists and political figures, even religious leaders are sounding the alarm on AI’s potential pitfalls. In a 2023 Vatican meeting and in his message for the 2024 World Day of Peace, Pope Francis called for nations to create and adopt a binding international treaty that regulates the development and use of AI.

Businesses of all sizes have found great benefits in using AI, and consumers across the globe use it in their daily lives. Although regulations could eventually ban certain AI technologies, that possibility doesn’t prevent societies from exploring the field. Fears about AI-powered weaponry have already come to fruition in the form of Lethal Autonomous Weapon Systems, which locate and destroy targets on their own while abiding by few regulations. Because of the proliferation of potent and complex weapons, some of the world’s most powerful nations have given in to anxieties and contributed to a tech cold war. The narrow views of a limited set of individuals have culminated in an AI industry that leaves out a range of perspectives. According to UNESCO, only 100 of the world’s 7,000 natural languages have been used to train top chatbots.

As AI becomes more commonplace at companies, it may reduce the number of available jobs, since AI can easily handle repetitive tasks that workers previously did. Even the most interesting job in the world has its share of mundane or repetitive work, such as entering and analyzing data, generating reports and verifying information. Using an AI program can spare humans the boredom of repetitive tasks and free their energy for work that requires more creativity. If AI algorithms are biased or used in a malicious manner (for example, in deliberate disinformation campaigns or autonomous lethal weapons), they could cause significant harm to humans.

Skill loss in humans

Researchers and developers must prioritize the ethical implications of AI technologies to avoid negative societal impacts. While the European Union already has rigorous data-privacy laws and the European Commission is considering a formal regulatory framework for ethical use of AI, the U.S. government has historically been late when it comes to tech regulation. Health care experts see many possible uses for AI, including billing and processing necessary paperwork. Medical professionals expect that the biggest, most immediate impact will be in data analysis, imaging and diagnosis. Imagine, they say, being able to bring all of the medical knowledge available on a disease to bear on any given treatment decision.

- Although AI can be, and already is being, used to help organizations develop more sustainable practices, some experts have expressed concerns that AI’s energy needs could hurt sustainability efforts more than help them, particularly in the short term.

- As a result, bad actors have another avenue for sharing misinformation and war propaganda, creating a nightmare scenario where it can be nearly impossible to distinguish between credible and faulty news.

- You might think you don’t care who knows your movements; after all, you have nothing to hide.

- What if, for example, the AI in a health care system makes a false diagnosis, or a loan is unfairly denied by an AI algorithm?

In fact, the White House Office of Science and Technology Policy (OSTP) published the AI Bill of Rights in 2022, a document outlining principles to help guide responsible AI use and development. Additionally, President Joe Biden issued an executive order in 2023 requiring federal agencies to develop new rules and guidelines for AI safety and security. There is also a worry that AI will progress in intelligence so rapidly that it will become sentient and act beyond humans’ control, possibly in a malicious manner. Alleged reports of this sentience have already surfaced, with one widely publicized account coming from a former Google engineer who claimed that the AI chatbot LaMDA was sentient and spoke to him just as a person would.

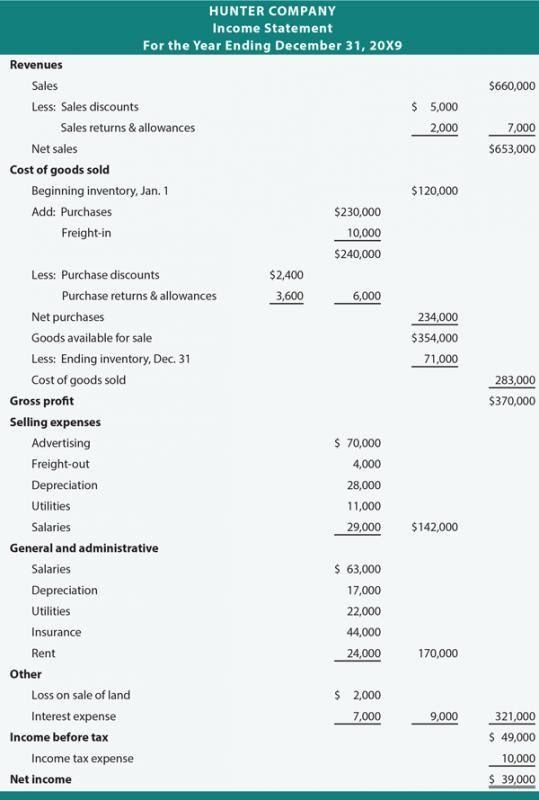

Substantial advances in language processing, computer vision and pattern recognition mean that AI is touching people’s lives on a daily basis, from helping people choose a movie to aiding in medical diagnoses. With that success, however, comes a renewed urgency to understand and mitigate the risks and downsides of AI-driven systems, such as algorithmic discrimination or the use of AI for deliberate deception. Computer scientists must work with experts in the social sciences and law to ensure that the pitfalls of AI are minimized. Many artificial intelligence models are developed by training on large datasets.

Leaders could even make AI a part of their company culture and routine business discussions, establishing standards to determine acceptable AI technologies. AI algorithms can also help investors make smarter, more informed decisions on the market. But finance organizations need to make sure they understand their AI algorithms and how those algorithms make decisions. Before introducing the technology, companies should consider whether AI will raise or lower confidence, to avoid stoking fears among investors and creating financial chaos. A 2024 AvePoint survey found that the top concern among companies is data privacy and security. And businesses may have good reason to be hesitant, considering the large amounts of data concentrated in AI tools and the lack of regulation governing this information.

Artificial intelligence (AI) is a branch of computer science dedicated to creating computers and programs that can replicate human thinking. More specifically, AI describes a machine’s ability to perform tasks and mimic intelligence at a level similar to that of humans. Some AI programs can learn from past data by analyzing complex datasets and improve their performance without humans having to refine their programming.

With the secrecy and mystique surrounding the latest rollouts, it’s difficult to know for sure. From health care and finance to agriculture and manufacturing, AI may be transforming the workforce from top to bottom. Here are five examples of companies—all in different sectors—that are using AI in new ways.

Similarly, AI itself has no human emotions or judgment, which makes it a useful tool in a variety of circumstances. For example, AI-enabled customer service chatbots won’t get flustered, pass judgment or become argumentative when dealing with angry or confused customers. That can help users resolve problems or get what they need more easily with AI than with humans, Kim said. To deliver such accuracy, AI models must be built on good algorithms that are free from unintended bias, trained on enough high-quality data and monitored to prevent drift. But when asked whether AI will be good or bad for them personally, only 32% said it will make things better, and 22% said it will make things worse.